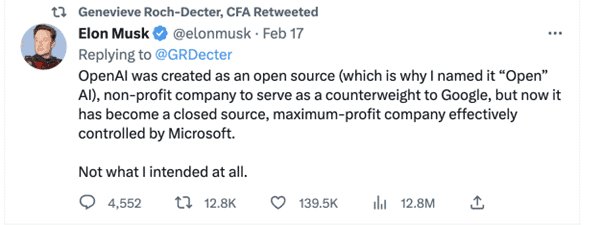

Elon Musk let the cat out of the bag when he declared, in so many words, that he never envisaged OpenAI being as closed as it is, or being controlled by a single entity, Microsoft. In his typical style, Musk called out Microsoft, which reportedly owns 49% of OpenAI. OpenAI was co-founded by Musk and is funded by backers including Microsoft, Reid Hoffman’s charitable foundation and Khosla Ventures.

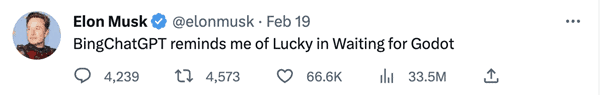

And then he followed it up with another swipe at Microsoft.

While it is not clear whether Musk wants to champion another, truly open OpenAI clone, what is certain is that a paradigm shift has swiftly taken hold since the public release of ChatGPT.

ChatGPT has reset the tech clock and unsettled many comfortable research teams. Google, for one, is known for deliberate product releases that are never rushed. Yet it was forced to hurry out Bard, its answer to the new ChatGPT-powered Bing, and Bard gave a wrong answer to a question in a demo video, which promptly sent Alphabet’s stock down considerably. Reuters reported, “Alphabet shares slid as much as 9% during regular trading with volumes nearly three times the 50-day moving average.”

Meanwhile, Microsoft has reported record installs of its new ChatGPT-powered Bing search, and it is now restricting each person to about 50 questions per day, a cap it attributed to very long chat sessions confusing the underlying model.

What Are the Changes on the Ground?

In a way, ChatGPT has set the tone for a new era in technology. It has also created instant AI experts overnight: everyone and their neighbor now seems to know a lot more about AI, neural networks, deep learning, training data and, of course, the lowly prompt.

Speaking of prompts, writing the perfect prompt to get the perfect response from a neural network, be it ChatGPT or any other public or homegrown model, is going to be a much sought-after skill. A testament to this is a recent post by a Wharton professor who wanted his students to use ChatGPT for an assignment.

Quite evidently, every software maker and SaaS platform provider, not to mention the e-commerce players, will build OpenAI’s GPT-3.x (or later) models or some other attention-based transformer into their products. Microsoft’s Satya Nadella has already made it clear that the Office 365 suite will be powered by ChatGPT, with Bing the first Microsoft product to adopt it. Taking a swipe at Google, the search giant nobody had managed to dethrone in two decades, he famously said, “I want people to know that we made them dance.”

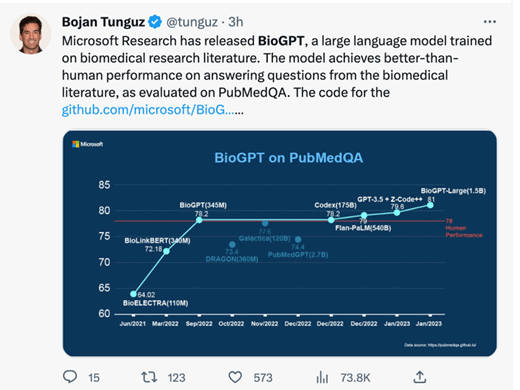

Other industries, such as aircraft manufacturing, mining, oil, automobiles, pharma and biotech, to name just a few, will eventually add attention-based transformers to their domains, and that is where the big boom is expected in the near future. And it looks like pharma is already in.

Software 2.0

Just as we are peering ahead for clues to the future, a new era of software development seems to be rising on the horizon. Andrej Karpathy, an AI researcher who was director of AI at Tesla and has since rejoined OpenAI, the company behind ChatGPT, sketched the contours of this era, which he called Software 2.0, more than five years ago. And make no mistake: the humble “prompt” specialist will be the much sought-after gladiator, and one who also has some statistical knowledge will be next to God. Here’s an excerpt from Karpathy’s blog:

The “classical stack” of Software 1.0 is what we’re all familiar with — it is written in languages such as Python, C++, etc. It consists of explicit instructions to the computer written by a programmer. By writing each line of code, the programmer identifies a specific point in program space with some desirable behavior.

In contrast, Software 2.0 is written in much more abstract, human unfriendly language, such as the weights of a neural network. No human is involved in writing this code because there are a lot of weights (typical networks might have millions), and coding directly in weights is kind of hard (I tried).
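To make Karpathy’s distinction concrete, here is a toy sketch in Python. It is our own illustration, not code from his post, and the threshold of 5, the function names and the choice of the classic perceptron learning rule are all hypothetical stand-ins; real Software 2.0 systems are neural networks with millions of weights trained by gradient descent. The 1.0 version states the rule directly, while the 2.0 version ends up as just two numbers, a weight and a bias, that nobody typed in.

```python
from typing import List, Tuple


# Software 1.0: a programmer writes the rule explicitly.
def is_large_v1(x: float) -> bool:
    return x > 5


# Software 2.0 (toy version): the "program" is a weight and a bias.
# Nobody writes them by hand; a training loop (here the classic
# perceptron update rule, an illustrative choice) searches for values
# that reproduce the labelled examples.
def train_perceptron(data: List[Tuple[float, int]],
                     max_epochs: int = 100) -> Tuple[float, float]:
    w, b = 0.0, 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for x, label in data:
            pred = 1 if w * x + b > 0 else 0
            err = label - pred      # -1, 0 or +1
            if err != 0:
                w += err * x        # nudge the weight toward the right answer
                b += err            # and the bias
                mistakes += 1
        if mistakes == 0:           # every example is now classified correctly
            break
    return w, b


# The learned "program" is applied by evaluating w * x + b.
def is_large_v2(x: float, w: float, b: float) -> bool:
    return w * x + b > 0


# Labelled examples; here they are generated by the 1.0 rule itself,
# standing in for real training data.
examples = [(float(x), int(is_large_v1(x))) for x in range(11)]
w, b = train_perceptron(examples)
print(f"learned weight={w}, bias={b}")
```

Both functions give the same answers on the training inputs, but only the first one can be read as a rule. Scale the two learned numbers up to millions and you get Karpathy’s point that coding directly in weights is “kind of hard”.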

There is much skepticism about AI/ML systems writing code that humans cannot really understand, and there are suggestions to apply formal specifications more rigorously so that a piece of software can be proved correct. One strong proponent is James King, a software developer who works with formal specifications, especially the TLA+ specification language. So formal specification and program correctness, once primarily academic pursuits, may finally take their place in the sun.

One interesting thing to observe is that we haven’t heard much from Meta, formerly Facebook, or its own AI legend Yann LeCun, apart from his mild criticism that releasing something like ChatGPT serves no wider purpose than entertaining people.

Well, ChatGPT may not be the AI we are looking for, but it has shown a new way of making things work. The world as we know it has changed, because we now understand that “Attention is all you need”, as the title of the 2017 paper that introduced the transformer put it.